The history and basic functionality of Artificial Intelligence (AI):

From programming to machine learning

The term ‘Artificial Intelligence’ is often criticized because it is unclear what human intelligence actually is and how it relates to the attributes and functions of the human nervous system. From its beginnings, computer science (or informatics) was divided between different views. For one group of scholars, the foundations were logic and symbol transformation: computing was derived from conscious human thinking, and the idea of using computers to mimic human intelligence in its own right was not considered. Instead, computers were seen as an extension of human capabilities with regard to memory and the capacity to carry out logical sequences of operations, so they were not regarded as intelligent in themselves. Two scientific developments, however, enabled another group of scholars to think differently about intelligence and computers. The first was a basic understanding of the general functional principles of complex nervous systems, including the human brain, e.g. learning through neural and synaptic plasticity as described by Donald O. Hebb (1949).

Hebb’s great influence on the interdisciplinary discussion of artificial intelligence may stem from the fact that his findings are easy to summarize in the (simplified) Hebbian learning rule: “neurons that fire together, wire together”. Interpreting neurons as electro-chemical switches is, in principle, a bold simplification, but this view later triggered the development of networks of electronic switches (transistors) working in parallel, so-called ‘perceptrons’ or single-layer neural networks, the predecessors of today’s convolutional neural networks. Perceptrons were able to recognize various patterns, e.g. handwritten digits, and to transform them into the correct input for computer-based data processing (cf. Sejnowski, T., 2018, The Deep Learning Revolution, Cambridge, MA: MIT Press).
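Both ideas can be sketched in a few lines of Python. This is a minimal illustration, not code from the cited sources: it assumes the common textbook form of Hebb’s rule, Δw_i = η · x_i · y, and the standard perceptron error-correction rule (Rosenblatt), with a hypothetical learning rate `eta`. All function names are illustrative.

```python
"""Hebb's rule and perceptron learning in plain Python (illustrative sketch)."""
from typing import List, Sequence, Tuple


def hebbian_update(weights: List[float], x: Sequence[float], y: float,
                   eta: float = 0.1) -> List[float]:
    """Apply delta_w_i = eta * x_i * y to every weight.

    For non-negative activities, a weight grows only when input x_i and
    output y are active together ("fire together, wire together").
    """
    return [w + eta * xi * y for w, xi in zip(weights, x)]


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Threshold unit: fire (1) if the weighted input sum plus bias exceeds zero."""
    s = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if s > 0 else 0


def train_perceptron(data: Sequence[Tuple[Sequence[float], int]],
                     epochs: int = 20, eta: float = 0.1
                     ) -> Tuple[List[float], float]:
    """Perceptron rule: change the weights only when a prediction is wrong."""
    n = len(data[0][0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error:
                weights = [w + eta * error * xi for w, xi in zip(weights, x)]
                bias += eta * error
                errors += 1
        if errors == 0:  # every pattern is classified correctly
            break
    return weights, bias


if __name__ == "__main__":
    # Input 0 and the output are active together, so only weight 0 grows.
    print(hebbian_update([0.0, 0.0], [1.0, 0.0], 1.0))  # [0.1, 0.0]
    # Logical OR is linearly separable, so a single-layer perceptron can learn it.
    or_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
    w, b = train_perceptron(or_data)
    print([predict(w, b, x) for x, _ in or_data])  # [0, 1, 1, 1]
```

A single-layer perceptron of this kind can only separate patterns that are linearly separable, which is one reason later networks added further layers.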

Another decisive step towards a serious scientific discussion of ‘Artificial Intelligence’ or ‘brain-style computing’ was Alan Turing’s seminal paper ‘Computing Machinery and Intelligence’ (1950), which proposed to consider the question: “Can machines think?” On page 19, Turing expressed his view that computers might be able to learn in a way similar to how children do:

- “Structure of the Child Machine = Hereditary Material

- Changes of the Child Machine = Mutation

- Natural Selection = Judgment of the Experimenter”

(Turing, A., 1950, p. 19)
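Read as a procedure, Turing’s three correspondences describe a simple mutate-and-select loop. The Python sketch below is an illustration under stated assumptions, not Turing’s own method: the list of numbers standing in for the ‘child machine’, the Gaussian mutation with `step_size`, the scoring function `judge` and the function name `educate` are all hypothetical choices.

```python
"""Turing's 'child machine' analogy as a mutate-and-select loop (illustrative)."""
import random
from typing import Callable, List


def educate(child: List[float],
            judge: Callable[[List[float]], float],
            steps: int = 200,
            step_size: float = 0.5,
            seed: int = 0) -> List[float]:
    """Improve a hypothetical 'child machine' by mutation and judgment.

    child: initial structure (Turing's 'hereditary material').
    judge: the experimenter's scoring function, higher is better
           (plays the role of 'natural selection').
    Each step proposes a randomly mutated copy and keeps it only if the
    judge scores it at least as well as the current machine.
    """
    rng = random.Random(seed)
    best, best_score = list(child), judge(child)
    for _ in range(steps):
        mutant = [g + rng.gauss(0.0, step_size) for g in best]  # 'mutation'
        score = judge(mutant)
        if score >= best_score:  # the experimenter approves the change
            best, best_score = mutant, score
    return best


if __name__ == "__main__":
    # Hypothetical task: the experimenter rewards parameters close to (3, -1).
    target = [3.0, -1.0]

    def judge(p: List[float]) -> float:
        return -sum((a - b) ** 2 for a, b in zip(p, target))

    start = [0.0, 0.0]
    trained = educate(start, judge)
    print(judge(start), "->", judge(trained))
```

Because only approved changes are kept, the score can never decrease; this is a form of random hill climbing, which is one simple way to read Turing’s analogy.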

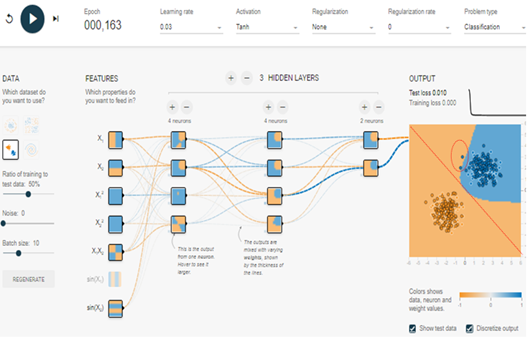

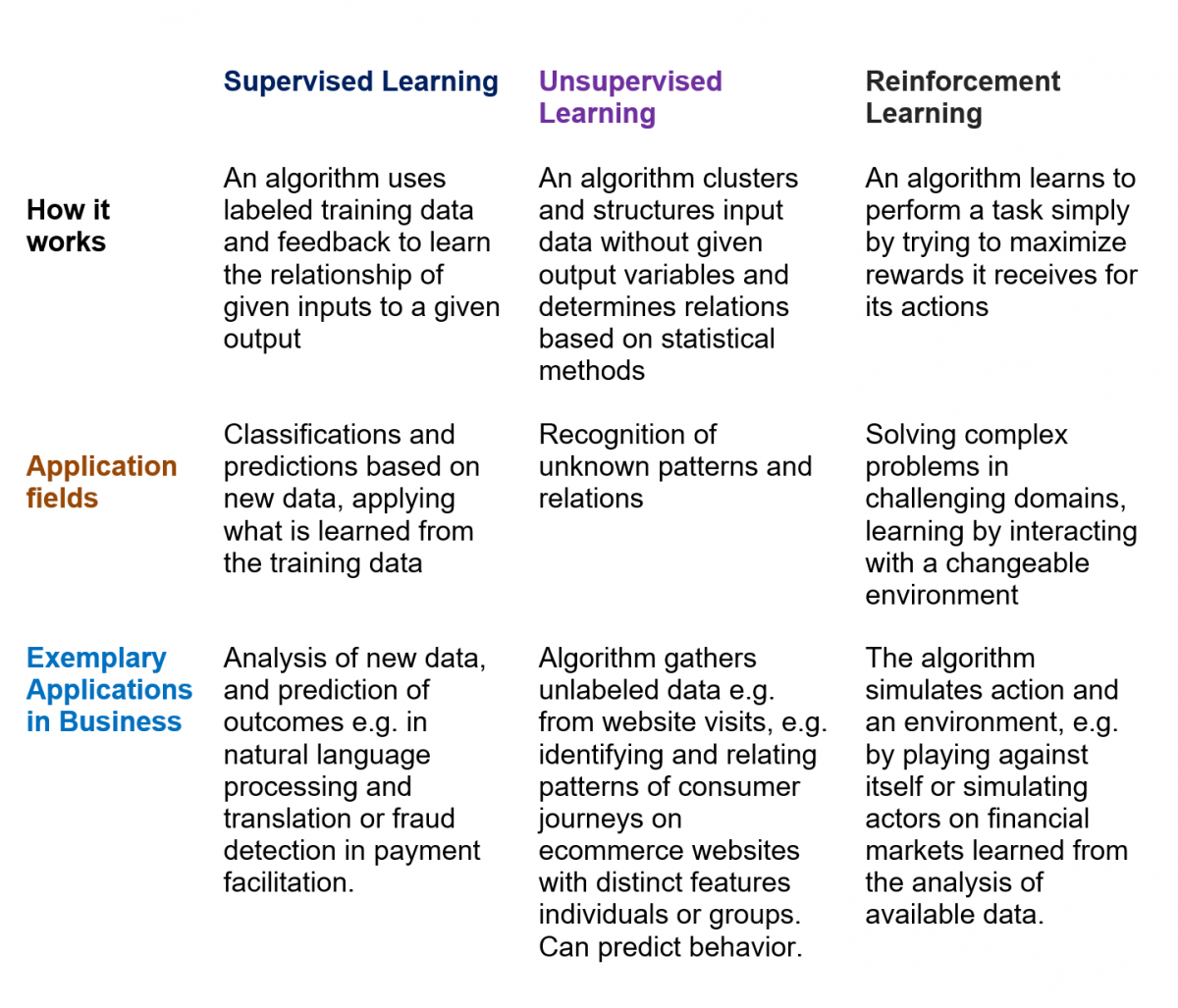

Indeed, today’s machine learning, the basis of the development of artificial intelligence, follows the evolutionary principles outlined by Turing and Hebb: multilayered, complex neural networks mimic structures of the human visual cortex (the initial algorithms), e.g. those responsible for recognizing patterns and objects. Variations (mutations) in the weights that neurons (transistors) assign to sensor inputs, together with the intervention of the experimenter (or teacher) who judges the results as right or wrong, are the principal factors in achieving improved results (better-trained algorithms) or more intelligent behavior of the neural network. This simple process is called supervised learning and may be compared with training or schooling.

The figure below displays a simple four-layer neural network for supervised machine learning. Supervised learning means that the network is trained on a set of training data labeled by humans, e.g. pictures from the publicly available database ImageNet, which contains more than 14 million pictures with labeled objects suitable for training neural networks to classify objects such as cars, bicycles, motorcycles, pedestrians, children, traffic lights or fire hydrants. This, of course, is vital for the development of driver-assistance systems.
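To make the idea of a four-layer network and human-labeled data more concrete, the following minimal Python sketch shows only the forward pass and the teacher’s check against the labels. It rests on assumptions: the layer sizes (4 inputs, two hidden layers of 5 neurons, 3 outputs), the sigmoid activation, the omission of bias terms, the label set and the feature vectors are all hypothetical. Real ImageNet classifiers work on pixel data with far larger convolutional networks.

```python
"""Forward pass of a small four-layer network plus a supervised accuracy check."""
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]

LABELS = ["car", "bicycle", "pedestrian"]  # hypothetical classes


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Matrix:
    """Random initial weights: one row of n_in weights per output neuron."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def layer(weights: Matrix, x: Sequence[float]) -> List[float]:
    """Weighted sum of the inputs for each neuron, squashed by a sigmoid."""
    return [1.0 / (1.0 + math.exp(-sum(w * xi for w, xi in zip(row, x))))
            for row in weights]


def classify(net: List[Matrix], x: Sequence[float]) -> str:
    """Propagate x through every weight layer; return the most active label."""
    for weights in net:
        x = layer(weights, x)
    return LABELS[max(range(len(x)), key=lambda i: x[i])]


def accuracy(net: List[Matrix],
             examples: Sequence[Tuple[Sequence[float], str]]) -> float:
    """Fraction of human-labeled examples the network classifies correctly."""
    correct = sum(classify(net, x) == label for x, label in examples)
    return correct / len(examples)


if __name__ == "__main__":
    rng = random.Random(42)
    # Input layer (4) -> hidden (5) -> hidden (5) -> output (3): four layers.
    net = [init_layer(4, 5, rng), init_layer(5, 5, rng), init_layer(5, 3, rng)]
    # Made-up feature vectors with labels assigned by a human 'teacher'.
    labeled = [([0.9, 0.1, 0.3, 0.7], "car"),
               ([0.2, 0.8, 0.5, 0.1], "bicycle"),
               ([0.1, 0.3, 0.9, 0.4], "pedestrian")]
    print(accuracy(net, labeled))  # an untrained network is mostly guessing
```

Supervised training then consists of adjusting the weights until `accuracy` on the labeled set rises. In current practice this is usually done with gradient descent via backpropagation rather than purely random variation.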